Transformation Services

We are a Digital Transformation Consultancy offering Transformation Services to small and medium businesses. We enable our clients to unlock their full potential by implementing cutting-edge digital solutions.

Fractional Consulting is a dynamic, cost-effective service where experienced consultants offer strategic guidance and expertise on a part-time or as-needed basis.

This approach provides the flexibility to access top-tier digital and analysis expertise without the commitment of a full-time position. It ensures that companies of all sizes can successfully advance their digital initiatives.

Our team of experienced consultants specialises in Digital Transformation Consulting services that streamline processes, enhance efficiency, and drive positive change.

With a deep understanding of business operations and a wealth of technical expertise, we empower organisations to leverage cutting-edge technologies such as AI, Process Mining, Cloud Computing, Web3, Process Automation and more.

Explore our range of Consultancy Services and discover how we can help your business thrive in the digital era.

HOW WE CAN HELP

At EfficiencyAI, we combine our technical expertise with a deep understanding of business operations to deliver strategic consultancy services that drive efficiency, innovation, and growth.

Let us be your trusted partner in navigating the complexities of the digital landscape and unlocking the full potential of technology for your organisation.

Latest Content

Explore our collection of insightful business transformation and technology articles on the latest topics. We also have a dedicated section on AI Regulation.

- AI Backlash and Regulation – Potential Causes

AI continues to leave an indelible mark on society and various sectors, drawing avid interest from tech enthusiasts and the public. Nevertheless, AI’s integration into… Read more: AI Backlash and Regulation – Potential Causes

- AI in Education and Teaching

The world of education is currently experiencing a momentous transformation as generative artificial intelligence (AI) technologies rapidly advance. This wave of technological innovation has prompted… Read more: AI in Education and Teaching

- Agentic AI Systems

Artificial intelligence (AI) has made remarkable strides in recent years. Businesses are swiftly realising the benefits of automation in their operations. However, what we see… Read more: Agentic AI Systems

- AI Skills Training Services

Harnessing the power of Artificial Intelligence (AI) has become increasingly crucial. From simplifying business processes to drastically altering customer experiences, to say AI has become… Read more: AI Skills Training Services

- Future-Proofing Careers: AI and Staff Development

Augmenting Staff Abilities, Not Substituting Them: Artificial Intelligence (AI) has recently proven to be a transformative technology that radically alters the business environment. Despite its… Read more: Future-Proofing Careers: AI and Staff Development

- Project Stargate AI Supercomputer – OpenAI and Microsoft

Microsoft and OpenAI Announce Plans for The Stargate Project: Microsoft and OpenAI, the technology giants, recently announced their plans for an ambitious data centre initiative,… Read more: Project Stargate AI Supercomputer – OpenAI and Microsoft

- AI Adoption Strategy

As industries become increasingly digital, Artificial Intelligence (AI) has steadily moved into the limelight of business operations. Touted as one of our most seminal technology… Read more: AI Adoption Strategy

- AI and Digital Transformation

Artificial intelligence (AI) and digital transformation (DX) have shaped an integrative relationship in the contemporary digital world. Their relationship reflects how the operations of these… Read more: AI and Digital Transformation

- Using AI in the Recruitment Process Guidelines

Embracing AI While Managing Potential Risks: The Department for Science, Innovation and Technology has recently released the Responsible AI in Recruitment guide for organisations to… Read more: Using AI in the Recruitment Process Guidelines

- Need for Legal Action Against AI Use in Terrorist Recruiting

The Call for Revised Legislation: The Institute for Strategic Dialogue (ISD), a London-based counter-extremism think tank, has issued a compelling call for swiftly implementing new… Read more: Need for Legal Action Against AI Use in Terrorist Recruiting

- AI’s Potential Effect on UK Employment

AI Adoption and the Job Market Research: Recent research that explores the effects of generative artificial intelligence (AI) on the UK’s employment market has displayed… Read more: AI’s Potential Effect on UK Employment

- AI and the Digital Economy

The Digital Economy and the AI Revolution: Eclipsing traditional economic activities, the burgeoning digital economy serves as a thriving marriage of digital computing technology and… Read more: AI and the Digital Economy

- Global AI Regulation

Understanding Global AI Regulation: Countless cases have shown that artificial intelligence (AI) is revolutionising many traditional sectors. With this evolution comes the issue of regulating… Read more: Global AI Regulation

- AI and Workplace Health and Safety

Artificial intelligence (AI) is weaving its magic across several areas, cautiously treading into workplace safety and health. It is transforming this domain in myriad ways.… Read more: AI and Workplace Health and Safety

- AI in General and Mental Healthcare

The rise of AI over the last ten years has led to significant transformations across many professional fields. From autonomous robots efficiently delivering groceries to… Read more: AI in General and Mental Healthcare

- AI, Virtual Worlds and the Digital Economy

The dynamic combination of Artificial Intelligence (AI) and Virtual Worlds (VW) is fast becoming the lifeblood of the digital economy. As these technologies evolve, they… Read more: AI, Virtual Worlds and the Digital Economy

- AI Use in English Courts

A Historic Leap into the Future: The English legal system – infused with historic traditions and precedents spanning over a thousand years – is now… Read more: AI Use in English Courts

- Synthetic Data in the UK Financial Sector

Synthetic data is radically changing the financial sector’s operations in the UK. The Financial Conduct Authority (FCA) Synthetic Data Expert Group (SDEG) has contributed significantly… Read more: Synthetic Data in the UK Financial Sector

- Safeguarding Artists from AI with Tennessee’s ELVIS Act

Addressing the Artist’s Dilemma in the AI World: AI is rapidly reshaping humanity, permeating every facet of life from healthcare to transportation and not leaving… Read more: Safeguarding Artists from AI with Tennessee’s ELVIS Act

- Artificial Intelligence in the UK Government

The UK government is actively focused on fostering a secure and ethical space for the comprehensive adoption and utilisation of artificial intelligence (AI). The government… Read more: Artificial Intelligence in the UK Government

- BBC Looking at Developing AI LLM

BBC Exploring Artificial Intelligence (AI) in Journalism: As one of the United Kingdom’s principal public broadcasting entities, the British Broadcasting Corporation (BBC) has made its… Read more: BBC Looking at Developing AI LLM

- Eldertech for an Ageing Society

What is Eldertech? As the global population ages, the significance of elder technology, or Eldertech, is increasingly evident. Businesses are now focusing on digital transformation… Read more: Eldertech for an Ageing Society

- United Nations AI Resolution

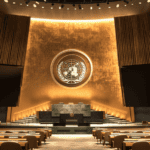

Historically, artificial intelligence has been unregulated and free to grow without constraint. Despite its numerous benefits, many have expressed concerns about this emerging technology’s potential… Read more: United Nations AI Resolution

- What is The EU AI Act?

Understanding the AI Act: Artificial Intelligence (AI) has rapidly become integral to various sectors, from healthcare and finance to transportation and entertainment. However, its widespread… Read more: What is The EU AI Act?

- Chat GPT-5 Release Date 2024

OpenAI’s GPT-5 Release: The artificial intelligence (AI) landscape is set for a significant shift as OpenAI, the pioneering AI company led by Sam Altman, gears… Read more: Chat GPT-5 Release Date 2024
